Data Center Energy Play With 5x Potential!

The company makes an indispensable product that addresses critical problems arising from growing energy demand at commercial sites and from the transition from fossil fuels to renewables.

Major technological transformations generally take place in three phases:

Picks & shovels.

Infrastructure.

Applications.

Picks & shovels are the critical technologies that underlie paradigm shifts. They are basically the “enablers.” For the computer revolution of the 1970s, these were logic chips. In the early Internet boom, the enablers were fiber-optic transport, routers, network switches, etc. In the age of AI, they are GPUs.

In the pure picks & shovels phase, companies mainly buy those enablers to experiment with the technology and see what they can do with it. Once a breakthrough arrives (like GPT-3), the direction of innovation becomes obvious, and the competition starts.

Intense competition among firms to win the new market leads to a massive infrastructure buildout, as they need more capacity to serve more customers, make better products, etc.

At this stage, business models based on providing the infrastructure emerge, because it’s not feasible for every competitor in the breakthrough product market to build its own. If a competitor loses the race, its infrastructure investment becomes a sunk cost. This is why they leave the infrastructure buildout to other businesses and lease the capacity they need.

This is why and how we have cloud companies, for instance.

Before the cloud, every startup needed to have its own servers, so the cost of building and scaling a company was high. After Amazon Web Services launched its cloud business, the cost of building a startup collapsed as startups didn’t need their own servers anymore. Thus, when the general infrastructure buildout ramps up, the cost of applying the technology starts declining, ushering in the next phase—applications.

The application layer is where the technology meets with end users. It’s generally pretty competitive, but big opportunities also exist, as those companies that can create networks or achieve economies of scale and scope can become massive.

In each phase, the companies making the most money are those dominating the previous phase.

This should be intuitive because in each phase, the competitors are using the products of the previous phase as the critical input.

Intel made so much money from the 1980s to 2000s because CPUs were the critical input for box makers; Cisco's revenues grew by 50x from 1992 to 2000 as the global connectivity over the Internet was scaling.
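To put Cisco's 50x in annual terms, here is a quick back-of-the-envelope sketch. It only uses the two figures quoted above (50x, 1992 to 2000); the function name is just for illustration.

```python
def implied_cagr(multiple: float, years: float) -> float:
    """Annual growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


# 50x revenue growth over the 8 years from 1992 to 2000
cisco_cagr = implied_cagr(50, 2000 - 1992)
print(f"Implied annual revenue growth: {cisco_cagr:.1%}")  # about 63% per year
```

In other words, a 50x move over eight years means revenue grew by well over half every single year.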

You can see this more clearly in the application phase of the internet, which started in the early 2000s and is still ongoing. The biggest winners of this phase aren’t the applications themselves, but those providing infrastructure to them, i.e., the cloud service providers.

We will see the same pattern for AI, too.

When it comes to AI, I believe we are in the early innings of the infrastructure phase.

The paradigm-shifting new product (LLMs) has been discovered, and we know GPUs are the critical input enabling LLMs. Thus, the infrastructure buildout has started, and it’s been accelerating for a while. Data centers and the neo-cloud platforms specifically tailored for AI workloads are proliferating at an unprecedented rate.

Nvidia is selling GPUs like hotcakes, and its revenues are growing much as Cisco’s did between 1990 and 2000.

Naturally, everybody is fixated on GPUs. Yet there are other picks & shovels of the AI revolution, and they are being largely ignored.

This is generally what happens during these booms. The main enabling products draw extraordinary attention, but others that are not the main enabler but still solve critical bottlenecks get ignored.

In the 1990s, everybody was focused on routers and switches, but another product was critical for the global internet buildout—fiber optic cables.

Corning was the leading supplier of those cables, and its revenues and thus the stock price followed a very similar pattern to that of Cisco:

I think the same thing is happening now, too.

GPUs are critical for AI models, but there are many other bottlenecks in the system. I think the most critical one is the energy bottleneck.

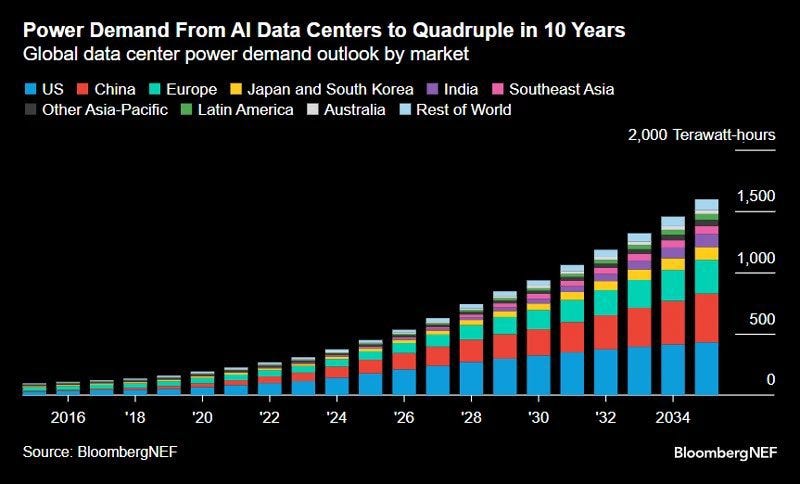

Bloomberg predicts that the global power demand for data centers will quadruple in the next 10 years:

OpenAI predicts that its data centers will consume more energy than India within the next 8 years. We already see these projections coming true. OpenAI started this year with around 230 MW of operational capacity, and it’ll finish the year with over 2 GW.

Skyrocketing energy demand will create significant bottlenecks, as there are several challenges ahead of us:

Our grids should be reimagined to handle the surging demand.

Renewable capacity should be ramped up as we phase out fossil fuels.

Stability and transportation challenges for renewable energy should be addressed.

Thus, energy will be a big bottleneck.

The market is currently focused on just the “generation” bottleneck, as the stock prices of pre-revenue companies working on mini-nuclear reactors and fusion are surging. However, the rest of the challenges are being ignored.

So I have been looking at this space for a while to find companies that address the critical energy bottlenecks ahead and are well-positioned to benefit from the AI boom.

I think I found one.

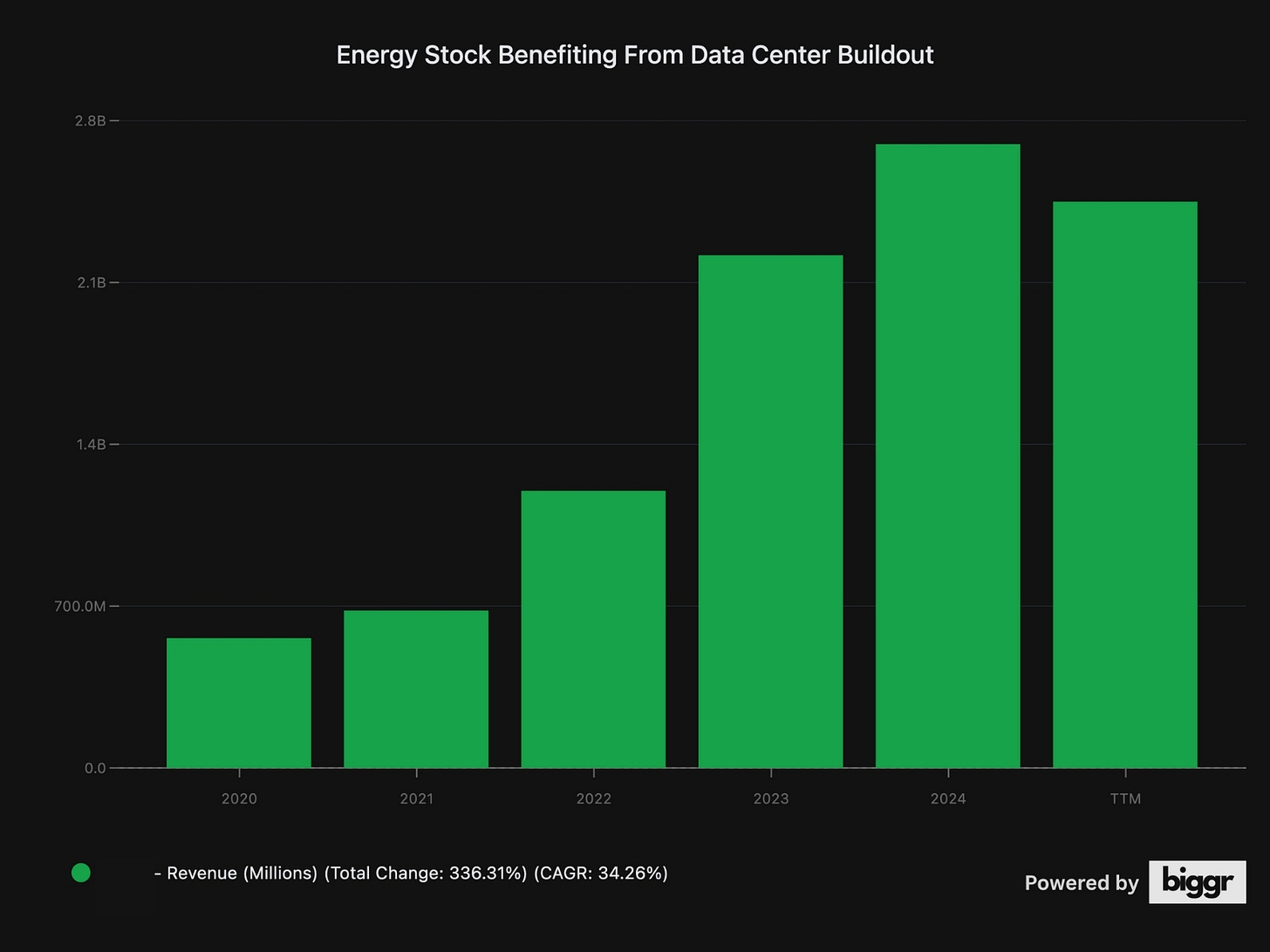

This company is addressing a critical energy bottleneck, and it leads its industry. We have already started to see it benefiting from the AI boom:

Amazon and Google are already its customers, having installed its solutions in their data centers.

It has grown revenues by 36% annually in the last 5 years.

It just became profitable last year.

Yet, despite its market-leading position and amazing growth in the last 5 years, it’s trading at just 1x revenue!

What’s even better is that the business generates significant recurring revenue from after-sales services and a software stack that is an integrated part of its product. They are necessary, especially the software stack, for customers to get the most out of its product.

On top of that, it has a dual catalyst ahead. It won’t just benefit from the surging power demand due to the data-center buildout; it’ll also benefit from the general shift toward renewables. I think, even if the AI thesis never existed, it would still have massive tailwinds ahead due to the renewables transition.

The stock has a path to a 5x return over the next 5 years, even assuming conservative growth, industry-average net margins, and a commodity-level P/E.
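The arithmetic behind a claim like this is simple enough to sketch. The only input taken from the text above is the starting valuation of 1x revenue. The growth rate, net margin, and exit P/E below are purely illustrative placeholders, not the figures used in this analysis, and the sketch ignores dilution, buybacks, and dividends.

```python
def return_multiple(ps_today: float, annual_growth: float, years: int,
                    net_margin: float, exit_pe: float) -> float:
    """Market-cap multiple implied by revenue growth and an exit valuation.

    Exit market cap = future revenue * net margin * P/E, so the exit
    price-to-sales ratio is simply net_margin * exit_pe.
    """
    revenue_growth = (1 + annual_growth) ** years
    exit_ps = net_margin * exit_pe
    return revenue_growth * exit_ps / ps_today


multiple = return_multiple(
    ps_today=1.0,        # from the text: trading at 1x revenue
    annual_growth=0.20,  # hypothetical placeholder
    years=5,
    net_margin=0.10,     # hypothetical placeholder
    exit_pe=20,          # hypothetical placeholder
)
print(f"{multiple:.2f}x")  # 4.98x
```

Notice how much of the result comes from the valuation moving from 1x sales to 2x sales (10% margin times a P/E of 20); revenue growth alone accounts for roughly 2.5x in this example.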

So, let’s cut the introduction and dive deep into this energy play.

🏭 Understanding The Business

AI hit the stage just as we were in the middle of a transition from fossil fuels to renewables. This is not just a voluntary change; it needs to be done as oil will be depleted in the next 50 years, and most other fossil fuels will be used up in 100 years.

It was already hard to achieve this on time, and it’s even harder now that AI substantially raises projected power demand.

This creates big problems both for the energy grids and for the data centers that consume energy to power the AI models. One of the most profound is intermittency, which means fluctuation in energy generation.

In electricity grids, the power supply should always match the demand. If supply falls short, we get blackouts; if it becomes excessive, the frequency of the electricity rises, damaging the equipment.
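To get a feel for how quickly an imbalance shows up in frequency, here is a rough sketch based on the textbook aggregated swing equation, df/dt = f0 × ΔP / (2H). The 50 Hz nominal frequency, the inertia constant of 5 seconds, and the 2% imbalance are illustrative assumptions, and the model deliberately ignores the automatic control responses that real grids rely on.

```python
def frequency_after(f_nominal_hz: float, inertia_h_s: float,
                    imbalance_pu: float, seconds: float) -> float:
    """Grid frequency after a constant supply/demand imbalance.

    Uses the aggregated swing equation df/dt = f0 * dP / (2 * H), where
    dP = (generation - load) as a fraction of system capacity. No governor
    or load response is modeled, so this only describes the first moments.
    """
    drift_hz_per_s = f_nominal_hz * imbalance_pu / (2 * inertia_h_s)
    return f_nominal_hz + drift_hz_per_s * seconds


# Hypothetical grid: 50 Hz nominal, inertia constant H = 5 s
surplus = frequency_after(50.0, 5.0, +0.02, seconds=2.0)  # 2% too much supply
deficit = frequency_after(50.0, 5.0, -0.02, seconds=2.0)  # 2% too little supply
print(f"surplus: {surplus:.2f} Hz, deficit: {deficit:.2f} Hz")
```

Even a 2% mismatch moves the frequency by a tenth of a hertz per second in this toy setup, which is why operators need something that can respond within seconds.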

In traditional power plants running on fossil fuels, nuclear, or hydro, the operators have control over this. They can reduce or increase power generation by burning more or less fuel, adjusting the output of the reactor, or opening or closing the vanes.

In renewables, this is not that straightforward. You generate power when nature decides, not you.

Naturally, it’s harder to manage intermittency.

It’s already a big problem, and it gets bigger for data centers. The cost of intermittency is huge for data center customers, especially when running training workloads. Training is extremely power-intensive and continuous, sometimes taking months. An interruption means:

Restarting from the last checkpoint (lost time, effort, and resources).

Potential damage to all critical equipment, like GPUs.

Delays in the product roadmap.
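The first item on that list is easy to put a number on. Here is a minimal sketch of the compute wasted by a single interruption. Every input below (cluster size, checkpoint timing, restart time, hourly cost) is a hypothetical figure chosen for illustration, and hardware damage and roadmap delays are not captured.

```python
def interruption_cost(num_gpus: int, minutes_since_checkpoint: float,
                      restart_minutes: float, cost_per_gpu_hour: float):
    """GPU-hours and dollars lost when a training run is interrupted.

    Work done since the last checkpoint is redone, and the cluster sits
    idle while the job restarts.
    """
    lost_hours = (minutes_since_checkpoint + restart_minutes) / 60
    gpu_hours = num_gpus * lost_hours
    return gpu_hours, gpu_hours * cost_per_gpu_hour


# Hypothetical cluster: 10,000 GPUs at $2 per GPU-hour,
# interrupted 45 minutes after the last checkpoint, 15 minutes to restart
gpu_hours, dollars = interruption_cost(10_000, 45, 15, 2.0)
print(f"{gpu_hours:,.0f} GPU-hours lost, about ${dollars:,.0f}")
# 10,000 GPU-hours lost, about $20,000
```

The loss scales linearly with cluster size, so the larger the training run, the more a single power event costs.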

This company addresses this problem.